Navigating the Challenges of Named CAP Schemas in SAP BTP

Lessons Learned from a Printing Solution Project

In our latest project, we faced an interesting challenge while developing a printing solution on SAP Business Technology Platform (BTP) using the Cloud Application Programming (CAP) model. The requirement was to grant external parties ODBC access to the SAP HANA Cloud schema generated by CAP. To make the schema easily identifiable, we wanted to assign a user-friendly name to it.

Note: The images and source code snippets provided in this blog are derived from a minimalistic application built on the SAP BTP trial landscape. This setup was specifically designed for demonstration purposes and does not reflect the actual production environment of the project. Certain details have been abstracted or modified for security and confidentiality reasons.

Overview

This blog post walks you through the key learnings and solutions we implemented to achieve this, focusing on four main points:

- Explicitly Naming a CAP Schema

- Overcoming Schema Name Conflicts with Extension Descriptors

- Automating Deployment Across Environments

- Granting External Access: Creating a Design-Time Role

Explicitly Naming a CAP Schema

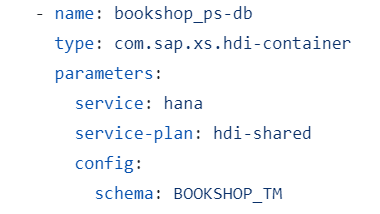

To assign a fixed, user-friendly name to the CAP schema, we made modifications to the mta.yaml file. Here's how it was configured:

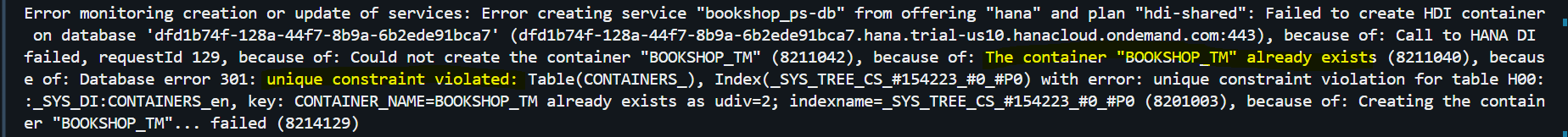
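
Since the configuration is only shown as a screenshot, here is a minimal sketch of what such a resource definition could look like. The resource name `bookshop_ps-db` is an assumption made for illustration; the relevant part is the `config.schema` parameter, which requests a fixed schema name for the HDI container instead of a generated one.

```yaml
# mta.yaml (excerpt): HDI container with an explicit schema name.
# The resource name is hypothetical; use the one from your own project.
resources:
  - name: bookshop_ps-db
    type: com.sap.xs.hdi-container
    parameters:
      config:
        # Fixed, user-friendly schema name instead of a generated one
        schema: BOOKSHOP_TM
```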

This configuration allowed us to deploy the application in the development environment without issues. However, we encountered a problem when deploying to the QA environment. Since both environments shared the same HANA Cloud instance (through instance mapping), the schema name BOOKSHOP_TM could only exist once. Attempting to deploy the same schema name in QA resulted in a conflict, throwing the following error:

Overcoming Schema Name Conflicts with Extension Descriptors

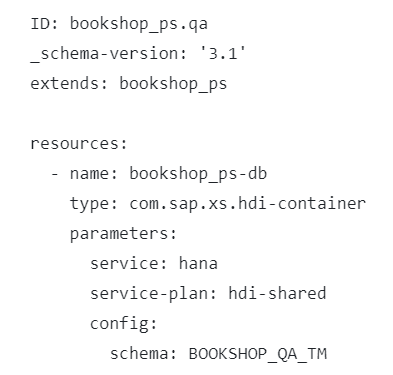

To resolve this, we created an extension descriptor file for the QA environment, which overrides the configuration in the mta.yaml. This allows us to use a different schema name in QA while maintaining a consistent setup across environments.

Here’s an example of the extensions/qa.mtaext file:
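
A minimal sketch of such an extension descriptor could look like the following. It assumes the MTA ID is `bookshop_ps` (inferred from the archive name used in the deploy command); the extension ID `bookshop_ps.qa`, the resource name `bookshop_ps-db`, and the schema name `BOOKSHOP_TM_QA` are hypothetical. The resource name must match the HDI container resource declared in your mta.yaml.

```yaml
# extensions/qa.mtaext: overrides the schema name for the QA environment.
_schema-version: "3.1"
ID: bookshop_ps.qa        # hypothetical ID of this extension descriptor
extends: bookshop_ps      # must equal the ID in mta.yaml (assumed here)

resources:
  - name: bookshop_ps-db  # hypothetical; must match the resource in mta.yaml
    parameters:
      config:
        # QA-specific schema name, avoiding the clash with BOOKSHOP_TM
        schema: BOOKSHOP_TM_QA
```

Only the parameters listed in the extension descriptor are overridden; everything else is taken from the mta.yaml unchanged.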

With this file, the deployment can now be successfully executed in QA using the following command:

cf deploy mta_archives/bookshop_ps_1.0.0.mtar -e extensions/qa.mtaext

Automating Deployment Across Environments

Once the manual deployment was tested and validated, we moved on to automating the process. Automating deployment in a classical staging landscape (Dev, QA, Prod) requires careful management of environment-specific configurations.

Although I won’t delve into the general setup of an SAP Continuous Integration and Delivery (CI/CD) job, you can find detailed tutorials and official documentation here:

- Tutorial: Get Started with an SAP Cloud Application Programming Model Project in SAP Continuous Integration and Delivery

- Tutorial: Configure and Run a Predefined SAP Continuous Integration and Delivery (CI/CD) Pipeline

- Official Documentation

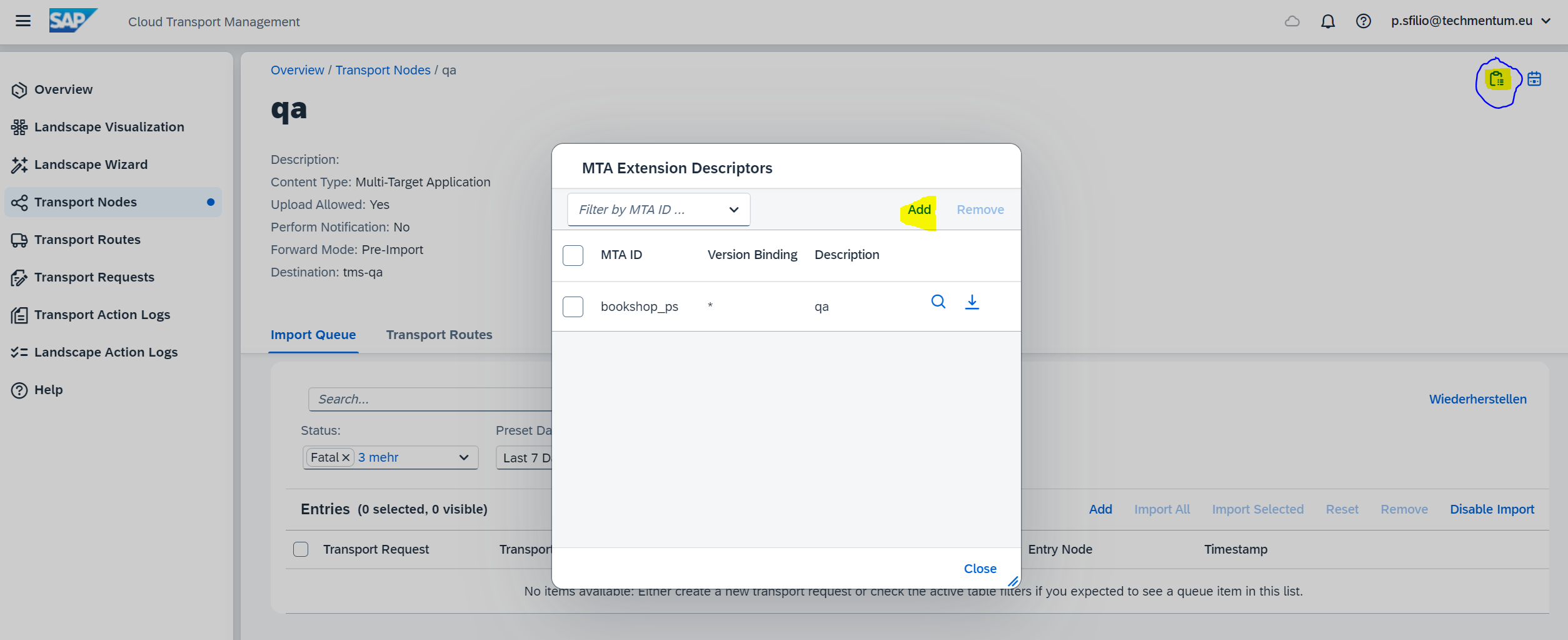

The key to our setup was using SAP Cloud Transport Management to manage these environment-specific configurations. We uploaded the extension descriptor files for QA and PROD directly to the Cloud Transport Management service, ensuring that the correct schema name was used in each environment. In practice, this meant having a single CI/CD job for Dev, which deployed to Dev and then placed the archive in the QA import queue of the Cloud Transport Management service, where the matching extension descriptor was applied on import.

Here you can see how to upload the extension descriptor file for the QA node:

Granting External Access: Creating a Design-Time Role

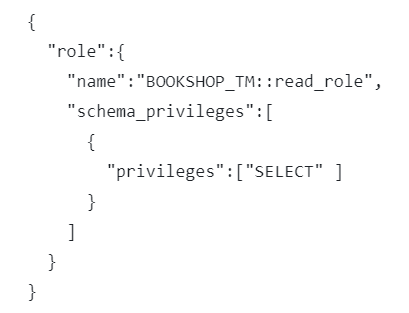

Finally, to grant external parties access to the schema, we created a design-time role. This role was defined in the file db/src/roles/ReadOnlyAccess.hdbrole as follows:

Notably, we omitted the schema property, which is optional. By doing so, the current schema name of the CAP data model is used automatically, allowing us to reuse this role across different environments. This streamlined our role management, as the role name didn’t need to be unique.

It's worth noting that CAP generates default access roles for HDI containers. In our case, we created a custom role because the default roles didn’t meet our specific requirement for read-only access. For more details on modifying default roles, refer to:

Conclusion

This project provided valuable insights into handling named CAP schemas in a multi-environment SAP BTP landscape. By explicitly naming schemas, leveraging extension descriptor files, and automating deployments via SAP CI/CD, we were able to meet our requirements efficiently. Additionally, creating custom design-time roles ensured that external parties could securely access the HANA Cloud schema.

The key takeaway is the importance of flexible and environment-aware configuration management in CAP projects, especially when dealing with shared resources across multiple environments. By incorporating these strategies, you can achieve a robust and scalable deployment pipeline for your SAP BTP applications.

Note: An alternative solution to managing explicit schema names could have involved using generic parameters like ${org} or ${space} in the mta.yaml file. These placeholders are resolved at deployment time, offering a flexible way to handle schema names across different environments. More details can be found here: Parameters and Properties.
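
As a rough sketch of this alternative (not something we used in the project), the schema name could be derived from the target space. The resource name `bookshop_ps-db` is hypothetical, and the sketch assumes that your space names contain only characters that are acceptable in a HANA schema name.

```yaml
# mta.yaml (excerpt): schema name derived from the target space.
resources:
  - name: bookshop_ps-db  # hypothetical resource name
    type: com.sap.xs.hdi-container
    parameters:
      config:
        # ${space} is replaced with the name of the space being deployed to,
        # so each space ends up with its own schema name
        schema: BOOKSHOP_TM_${space}
```

With this approach no extension descriptor is needed per environment, at the cost of tying the schema name to the space naming convention.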